The birth of applied artificial intelligence is upon us. Conceived more than 60 years ago, its long gestation has finally produced the sort of offspring expected to usher in transformative progress in every area of business and life.

It’s quite the baby shower; the world is set to pour $35 billion into its crib in 2019, according to IDC – a 44% surge from the year before. Gifts proffered lavishly in the hope they’ll be returned in the future with interest. But, as Charles Dickens pointed out long before anyone thought to apply an algorithm to his e-books, corrupted youth and great expectations beget serious challenges.

The duty now is to nurture the infant technology in a way that ensures it doesn’t adolesce into a spoilt, sociopathic ingrate, inhaling our money only to embarrass us in public. Thoughts have turned to ensuring AI matures into a trustworthy, productive and reliable friend rather than a malignant source of new problems, be those for businesses attempting to exploit AI or for society more generally.

In either case, perhaps the best place to start is to stop starting badly. At least this is the view of Simon Driscoll, Data & Intelligence Practice Lead in the UK for Japanese IT services giant NTT Data. Driscoll has a practitioner’s wisdom when it comes to effective data strategy implementations and now, through NTT Data’s newly unveiled guidelines for the stewardship of AI, a prescription for the future.

His 25 years at the data management coalface – from Vodafone through BAE Systems to Deloitte before arriving at NTT Data late last year – inform a rationalist perspective on the steps required to implement productive AI applications. He says the most common problem he encounters is that organisations keen to implement AI often make the mistake of beginning their journey by implementing AI.

“I think we’re in a hype curve at the moment. Everybody wants to focus on exploitation of data, because that’s where the hype is, that’s where AI sits. Everybody wants to buy AI,” he says.

The AI feeding frenzy – or “peak AI hype curve” as Driscoll puts it – is leading to lofty promises from an ever-expanding range of vendors, all of whom attest that their products can jump off the shelf and save companies vast amounts of time and money. But he suggests it’s a rush to bring the baby home before properly stocking the cupboards and preparing the nursery.

“Today, I do not believe that the majority of organisations have recognised that data is an absolutely critical business asset and it needs to be treated as such. What this means is that you come back to AI falling victim to that garbage in, garbage out situation.”

A painfully learned truth is that advanced AI solutions can be attempted but are ultimately thwarted when applied to data that can’t support them. It’s a fact borne out by a recent IDC survey that suggests a full quarter of companies report a 50% failure rate in their AI initiatives.

Driscoll adds: “All the conversations my clients want to have with me and my teams are all about AI or data exploitation. And it’s great, because that’s a great conversation starter. However, what we actually find if you go through the traditional process of doing a proof of concept, a proof of value, then into productionisation, is that many of those exercises do not get to the productionisation stage.”

Stalled progress of AI implementations is not necessarily the fault of the analytics or AI tools themselves – it’s because of legacy systems, siloed data sources, a lack of integration and chaotic, uncleansed data. Applying AI is therefore the end point of a process that should have properly begun much earlier, addressing the sourcing of data, rationalising its treatment and understanding how it will be made available.

“I guess it’s a little bit of a personal frustration. Data is not new. Many of the conversations I actually have with my clients today go back to the same things we’ve been talking about for the last 10, 15, 20 years.

“AI is not a simple solution. It takes time. It takes investment. I think the implementation of AI takes far more time than lots of organisations believe, or are led to believe.”

Driscoll is tackling these issues with clients across a wide range of sectors with NTT Data. In the UK, the company focuses on telecoms and media, public services, the insurance sector and manufacturing. In doing so it offers data intelligence services from architecting and build, through to data management and exploitation.

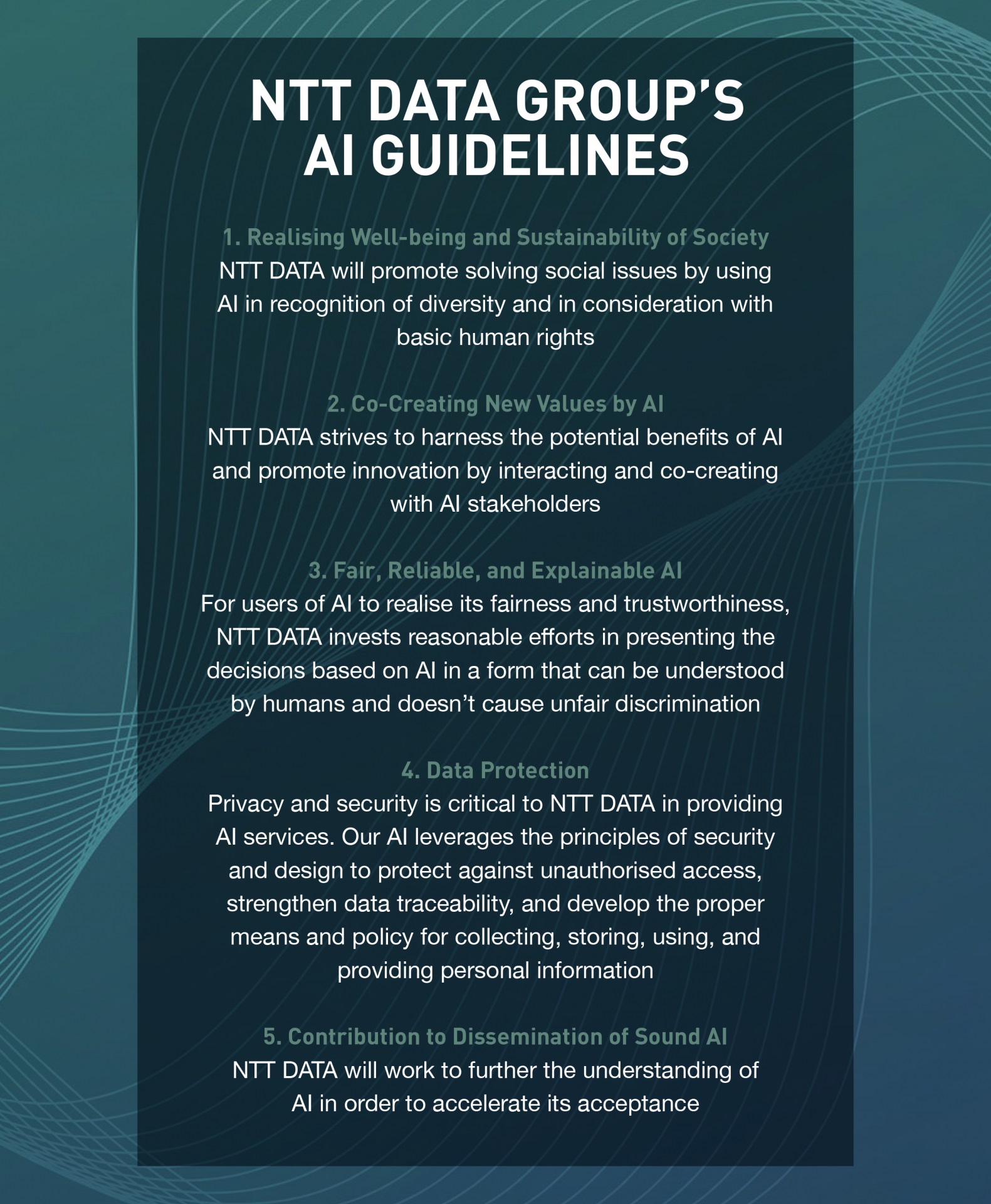

It’s the exploitation element that’s driving a lot of the buzz around AI, and as the company levers more clients in that direction it has formulated a global policy framework to help guide the world’s conversation about how the technology should be governed.

“That is what has driven our ethical guidelines. Five very clear principles around how we want to ensure that AI is recognised as valuable to society in general.”

These principles, revealed in May this year, codify a set of values for the company that aim to ensure it contributes to a society where the positive outcomes of AI are widely accessible, while potential downsides, particularly social discrimination through unwitting (or deliberate) input bias, are reduced.

“What I am finding really refreshing is these guidelines we’ve published – and this comes from our Japanese heritage – the whole organisation and the individuals within it absolutely believe in these. It’s not marketing hype,” Driscoll stresses.

The vision dictates that the company will work proactively with other players in the AI field to collaborate on developing AI with sustainability and human wellbeing at its heart. As Driscoll explains, NTT Data’s ethical guidelines are at their core a way to “ensure AI is not something that harms humans”. To do this it commits to educating both professionals and the public, to prioritising the respect and security of personal data, and to developing AI that is always explainable in its decision making.

This collaborative, educational approach is one Driscoll sees as vital for establishing trust in AI in the face of often querulous or misinformed mainstream media. Whether it’s Facebook evading accountability for the Cambridge Analytica scandal, Alexa’s alleged eavesdropping or Tesla’s latest autonomous fender-bender, bad news tends to overwhelm the good. But the positives are plentiful, if underrepresented: from NTT Data’s own work bringing AI to bear in hospital intensive care units right the way through to the fact that hundreds of millions of satisfied customers have bought AI-powered voice assistants because they actually are useful.

As AI advances, says Driscoll, industry players will need to become much better at fostering trust and working together to achieve it.

“If I look at the Big 5 – the ones who have the most to gain, and potentially the most to lose if an AI winter comes around – they should definitely be driving some of that conversation.

“I think Facebook are now starting to do things, but I think that’s because of the Cambridge Analytica thing. They’re managing the fallout as opposed to proactively talking about the benefits and talking about how they’re going to use AI in an ethical way. And I don’t think any of the others are being overt in how they are making AI ethical right now.”

Early evidence of NTT Data’s seriousness in this effort was the unveiling in May of its new Artificial Intelligence Centre of Excellence in Barcelona. The aim is to have trained at least 200 NTT Data staff in AI over the next 12 months.

But while Driscoll and his colleagues at NTT Data work to progress a global consensus around ethical AI, he is quick to rewind the conversation and point out that whatever we imagine of AI’s capabilities in the future, it will be informed by the data we feed it today.

In fact, he goes so far as to say the next 10 years will probably not be marked by any major leaps in AI technology itself – albeit he reserves healthcare as an outlying sector there. Rather, for business, it’s the human side of the equation that will either march it forwards or hold it back. Lawyers, governments and journalists will have as much to say on the topic as data scientists.

“We already have so many possibilities with AI that I don’t believe that AI itself will evolve massively over the next 10 years. It’s all the other elements that are required,” explains Driscoll.

“AI is absolutely dependent on data. AI can only learn from the data it is given. AI is not an island. It’s not a standalone. It requires this data – it requires us to ensure that the data that’s being used by AI adheres to all the regulations, but not just the regulations – all of the ethical uses of that data.”

Set during the technological upheaval of an earlier industrial revolution, Charles Dickens’ Great Expectations sees its main characters, orphans Pip and Estella, trained by surrogate parents to exact cold revenge on society. The result, explains Estella to her guardian: “I am what you designed me to be. I am your blade. You cannot now complain if you also feel the hurt.”

In the early years of life, an inconsistent or corrupted environment inevitably leads to fixed and irretrievable harm for us humans. The same could be said of AI. As Driscoll, and by extension NTT Data, make clear, a concerted, collaborative effort is now required to ensure AI is nourished with properly treated data, and that going forward it develops in accordance with a universally agreed set of honest, transparent and positive values.

“We need to ensure that AI is seen as something which is really focused around society,” he concludes. “We need to ensure that there is clarity around how AI is actually being driven.”