Augmented reality (AR) will play a major role in transforming the way we live and work. PwC’s recent report, ‘Seeing is believing’, suggests that AR has the potential to add over $1 trillion to the global economy by 2030, and many crucial industries, including manufacturing, healthcare, energy and retail, stand to benefit from it. However, two fundamental optical issues are preventing us from fully harnessing its potential.

Unless you’re an ophthalmic expert, you have probably never heard of vergence accommodation conflict (VAC) and focal rivalry. According to a recent report from specialist AR/VR industry analysts at Greenlight Insights, these obscure but important optical issues affect 95% of all current AR applications and are blocking their true enterprise potential.

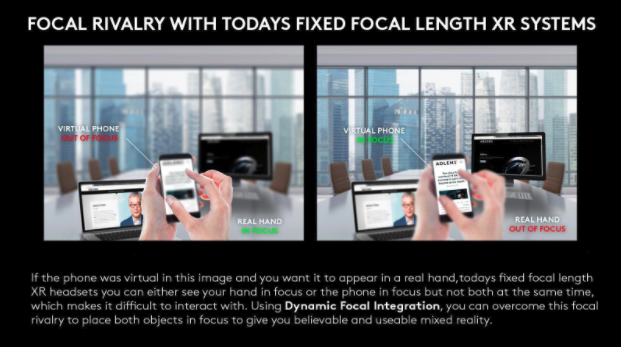

Focal rivalry prevents us from accurately placing virtual objects in real space

Focal rivalry affects both the believability and the accuracy of mixed reality experiences, and AR will struggle to realise its true potential if we can’t address it. Currently, when we look at objects through AR systems, our eyes must choose between seeing either virtual or real content clearly; we can’t focus on both at once. This is a serious limitation for AR.

The University of Pisa recently conducted a study exploring how focal rivalry affects people’s performance when using AR to complete precision tasks. The findings suggest that high-precision manual tasks may not be feasible with current AR systems because of focal rivalry.

When AR is used for tasks such as surgery or engineering, focal rivalry will limit the usefulness of the technology. The inability to integrate virtual content meaningfully with the real world is holding us back from designing AR-assisted applications that depend on accurately overlaid virtual content.

VAC destroys user comfort and blocks close proximity applications

Vergence accommodation conflict decouples the natural vergence and accommodation responses in the eyes. As an object gets closer, our eyes naturally turn inwards (to triangulate on it) and this stimulates a change in focus. Because the optics in an AR headset present virtual content at a fixed focal distance, the eyes converge on a nearby virtual object while their focus has to stay at that fixed distance, so the two responses no longer agree.

When virtual objects are brought up close to you, the mismatching cues received by your brain cause discomfort and eye fatigue. This requires users to take frequent breaks from the device, effectively preventing AR systems from becoming all-day wearables.

VAC is also placing constraints on developers trying to create applications where users can interact with virtual content within arm’s reach. Microsoft is one of the most advanced AR headset manufacturers in the world, but it is currently stuck with advising content creators (in its developer guide) to place virtual content between 1.25m and 5m away from the user to ensure a comfortable mixed reality experience. This means that users will struggle with even the most basic tasks that need to be done at close range, such as reading.
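To make the size of the mismatch concrete, here is a minimal sketch in Python. It uses two standard geometric relations: the vergence angle for an object at distance d is 2·atan(IPD / 2d), and the accommodation (focus) demand is 1/d in dioptres. The interpupillary distance of 63 mm and the fixed focal plane of 2.0 m are illustrative assumptions, not figures taken from the reports cited here, and the function names are hypothetical.

```python
import math

# Assumed values for illustration only (not from the article's sources).
IPD_M = 0.063          # interpupillary distance in metres
FOCAL_PLANE_M = 2.0    # fixed focal distance of the headset optics, in metres


def vergence_angle_deg(distance_m, ipd_m=IPD_M):
    """Angle between the two lines of sight when fixating at distance_m."""
    return math.degrees(2 * math.atan(ipd_m / (2 * distance_m)))


def accommodation_demand_d(distance_m):
    """Focus demand in dioptres for an object at distance_m."""
    return 1.0 / distance_m


def vac_mismatch_d(object_distance_m, focal_plane_m=FOCAL_PLANE_M):
    """Gap in dioptres between where the eyes converge (the virtual
    object) and where they must focus (the fixed focal plane)."""
    return abs(accommodation_demand_d(object_distance_m)
               - accommodation_demand_d(focal_plane_m))


if __name__ == "__main__":
    for d in (0.4, 1.25, 2.0, 5.0):
        print(f"{d:>5.2f} m  vergence {vergence_angle_deg(d):5.2f} deg  "
              f"mismatch {vac_mismatch_d(d):.2f} D")
```

Under these assumptions, content at 1.25m or 5m sits within about 0.3 dioptres of the focal plane, while content at a reading distance of 0.4m is about 2 dioptres away from it, which illustrates why close-range content is the hard case.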

Engaging all the visual cues requires a dynamic optical interface

There are 23 visual cues that make up our natural perception of 3D space in the real world. Once all the visual cues are engaged in an AR system we can deliver a truly immersive, believable and useful mixed reality experience – that includes bringing content comfortably into close proximity and placing digitally-rendered objects accurately and convincingly in the real world.

Eighteen of the visual cues can be dealt with by upgrading software and hardware, but no amount of improvement in these fields will resolve issues like VAC and focal rivalry. Our eyes cannot be fooled by software: the remaining five visual cues can only be engaged through the optical interface, using systems such as dynamic fluid lenses and light field displays.

AR headset manufacturers urgently need to integrate a dynamic optical interface system

Although the market is primed for growth, that growth will be limited unless these fundamental optical issues are resolved. The recent focus on upgrading software and computer hardware, though important, has left the optical interface an under-represented area of innovation. The only way to avoid visual fatigue and to mix real and virtual content accurately and believably is to use a dynamic focus solution.

Greenlight Insights analysts reviewed all the key dynamic focus technologies currently in development for the AR sector, comparing their relative performance and likely time to market. Their comparison concludes that dynamic lens systems that can change focal planes are best placed to address all the key issues. The analysts also predict that solving these two fundamental optical issues would unlock an additional $10 billion in spending on enterprise AR applications by 2026.

By solving issues like VAC and focal rivalry, we elevate the user experience of mixed reality devices, making them both comfortable enough for all-day use and more useful, particularly in enterprise scenarios.

John Kennedy

CEO of Adlens